The Observability Stack for Production Multi-Agent Systems

AI Architecture | January 27, 2026 | 6 min read


Gartner predicts 60% of AI deployments will fail without proper observability by 2027. Learn the tools and patterns that separate production systems from POCs.

TL;DR: Production multi-agent systems need an observability stack that covers metrics, traces, logs, and evaluations. LangSmith leads for LangGraph users, W&B Weave excels at multi-agent visualization, and Langfuse offers open-source flexibility. Gartner predicts that without proper observability, 60% of AI agent deployments will fail by 2027.

Why Observability is Non-Negotiable in 2026

The shift from chatbots to autonomous agents has created a visibility crisis. When a single LLM call fails, you can debug it. When a five-agent orchestration fails at 3 AM, you need more than hope.

According to Gartner's predictions, 60% of AI agent deployments will fail without proper observability by 2027. This isn't about nice-to-have dashboards—it's about knowing when your system is degrading before your users do.

The observability challenge in multi-agent systems is fundamentally different from traditional software:

| Traditional Software | Multi-Agent Systems |
|---|---|
| Deterministic flows | Probabilistic behavior |
| Predictable costs | Variable token usage |
| Binary success/failure | Quality gradients |
| Static debugging | Dynamic reasoning chains |

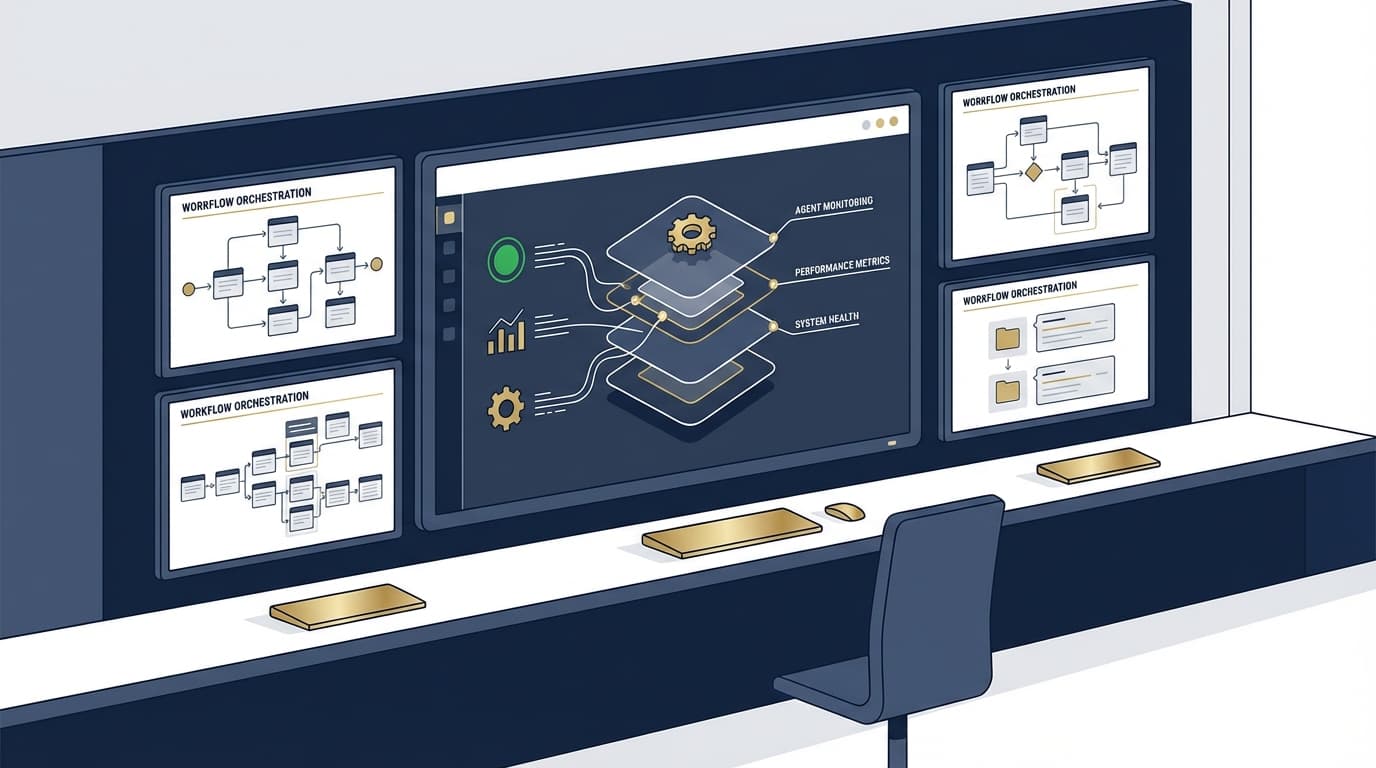

What is the Observability Stack for AI Agents?

The modern AI observability stack has four layers: metrics, traces, logs, and evaluations.

Each layer answers a different question:

- Metrics: Is the system healthy? How much is it costing?

- Traces: What happened in this specific request?

- Logs: What decisions did the agent make and why?

- Evaluations: Is the output quality acceptable?
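Under the hood, the traces layer is a tree of spans: each agent step, LLM call, or tool call records its parent, so a failed five-agent request can be replayed end to end. Here is a minimal in-memory sketch using only the Python standard library; `Span`, `span()`, and `print_tree()` are hypothetical names, not any vendor's API, and tools like LangSmith or OpenTelemetry handle this (plus export and storage) for you. Span attributes double as the metrics layer (token counts) and the logs layer (agent decisions).

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    """One unit of work (agent step, LLM call, tool call) inside a trace."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: float = 0.0
    attributes: dict = field(default_factory=dict)


# Hypothetical in-memory sink; a real setup would export spans to a backend.
SPANS: list[Span] = []
# Tracks the active span so nested calls pick up their parent automatically.
_current_span = ContextVar("current_span", default=None)


@contextmanager
def span(name: str, **attributes) -> Iterator[Span]:
    parent = _current_span.get()
    s = Span(
        name=name,
        # Children inherit the root's trace_id; a root span starts a new trace.
        trace_id=parent.trace_id if parent else uuid.uuid4().hex,
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent.span_id if parent else None,
        start=time.perf_counter(),
        attributes=attributes,
    )
    token = _current_span.set(s)
    try:
        yield s
    finally:
        s.end = time.perf_counter()
        _current_span.reset(token)
        SPANS.append(s)


def print_tree(spans: list[Span]) -> None:
    """Print spans as an indented parent/child tree, ordered by start time."""
    children: dict = {}
    for s in spans:
        children.setdefault(s.parent_id, []).append(s)

    def walk(parent_id: Optional[str], depth: int) -> None:
        for s in sorted(children.get(parent_id, []), key=lambda x: x.start):
            ms = (s.end - s.start) * 1000
            print(f"{'  ' * depth}{s.name} {ms:.2f}ms {s.attributes}")
            walk(s.span_id, depth + 1)

    walk(None, 0)


# Example: an orchestrator delegating to two agents, one of which calls a tool.
with span("orchestrator", query="refund status"):
    with span("planner_agent", input_tokens=120, output_tokens=40):
        pass
    with span("retriever_agent", docs_returned=3) as retriever:
        with span("vector_search"):
            pass
        retriever.attributes["decision"] = "answer from docs"

print_tree(SPANS)
```

Because the active span lives in a `ContextVar`, parent links also follow asyncio tasks, which copy the current context when they are created.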

How Do the Major Observability Tools Compare?

LangSmith (LangChain)

LangSmith is the first-party observability platform for LangChain and LangGraph users. Its biggest selling point: zero additional overhead on traces.

Best for: Teams standardized on LangChain/LangGraph

Key features:

- Automatic trace capture for LangGraph

- Prompt versioning and A/B testing

- Built-in evaluation datasets

- Annotation queues for human review

Pricing: Free tier → Usage-based

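```python
# LangSmith traces LangGraph runs automatically once tracing is enabled
# (e.g. LANGSMITH_TRACING=true and LANGSMITH_API_KEY set in the environment).
# For plain Python functions, @traceable records each call as a run.
from langsmith import traceable

@traceable(name="agent_call")
def my_agent(query: str) -> str:
    # Agent logic here (LLM calls, tool use, etc.)
    response = f"Answer to: {query}"  # placeholder so the example runs
    return response
```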

Weights & Biases Weave

W&B Weave extends Weights & Biases' ML-native observability platform to agents. It excels at visualizing multi-agent systems, where you need to see how several agents interact.

Best for: ML teams, complex multi-agent research

Key features:

- Multi-agent trace visualization

- Experiment comparison

- Model registry integration

- Custom dashboards

Pricing: Free tier → Team → Enterprise

Langfuse (Open Source)

Langfuse is the open-source alternative that you can self-host. If you have privacy requirements or want to control costs at scale, it's worth evaluating.

Best for: Cost-conscious teams, privacy requirements, open-source preference

Key features:

- Self-hosted option

- Framework agnostic

- Built-in cost tracking

- Growing community

Pricing: Free (self-hosted) → Cloud pricing

OpenTelemetry

OpenTelemetry is the vendor-neutral standard. While it requires more setup, it integrates with existing APM tools and gives you maximum flexibility.

Best for: Teams with existing observability, multi-cloud environments

Key features:

- Industry standard format

- Integrates with Datadog, Grafana, etc.

- No vendor lock-in

- Future-proof

Which Metrics Should You Track for AI Agents?

Latency Metrics

| Metric | Description | Target |
|---|---|---|
| p50 latency | Median response time | Under 2s |
| p95 latency | 95th percentile response time | Under 5s |
| p99 latency | 99th percentile (tail) response time | Under 10s |
| Time to first token | Delay before streaming starts | Under 500ms |
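Percentile targets like these are computed over a window of recent requests, not per request. A minimal sketch using Python's standard `statistics.quantiles`; the `latency_report` helper and the sample window are illustrative assumptions, and time to first token would be tracked the same way from streaming timestamps.

```python
import statistics

# Targets from the latency table, in seconds.
LATENCY_TARGETS_S = {"p50": 2.0, "p95": 5.0, "p99": 10.0}


def latency_report(samples_s: list[float]) -> dict[str, tuple[float, bool]]:
    """Map each percentile to (observed seconds, meets target) for a window of requests."""
    if len(samples_s) < 2:
        raise ValueError("need at least two latency samples")
    # n=100 returns 99 cut points: index 49 is p50, 94 is p95, 98 is p99.
    # "inclusive" treats the samples as the whole population, so results stay
    # within the observed min/max.
    cuts = statistics.quantiles(samples_s, n=100, method="inclusive")
    observed = {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}
    return {p: (v, v < LATENCY_TARGETS_S[p]) for p, v in observed.items()}


# Hypothetical window: mostly fast requests plus a slow tail.
window = [0.8] * 90 + [3.0] * 8 + [12.0] * 2
for percentile, (seconds, ok) in latency_report(window).items():
    print(f"{percentile}: {seconds:.2f}s {'OK' if ok else 'ALERT'}")
# p50: 0.80s OK
# p95: 3.00s OK
# p99: 12.00s ALERT
```

Note how a healthy median can hide a tail that only p99 exposes, which is why the table tracks all three.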

Cost Metrics

| Metric | Description | Alert Threshold |
|---|---|---|
| Cost per query | Average token cost | >$0.10 |
| Daily spend | Total daily cost | Budget limit |
| Token waste | Unused context | >30% |
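In a multi-agent system, cost per query means summing tokens across every agent that touched the request. A minimal sketch of the first two alert checks, assuming hypothetical per-million-token prices (substitute your provider's real rates); `QueryUsage`, `query_cost`, and `cost_alerts` are illustrative names. Token waste is left out because measuring unused context depends on how your agents assemble prompts.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens; use your provider's actual rates.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00
COST_PER_QUERY_ALERT_USD = 0.10  # alert threshold from the table


@dataclass
class QueryUsage:
    """Token totals for one user query, summed across every agent's LLM calls."""
    input_tokens: int
    output_tokens: int


def query_cost(usage: QueryUsage) -> float:
    return (usage.input_tokens * INPUT_PRICE_PER_M
            + usage.output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def cost_alerts(day: list[QueryUsage], daily_budget_usd: float) -> list[str]:
    """Return alert messages for one day of queries (empty list means no alerts)."""
    costs = [query_cost(q) for q in day]
    alerts = []
    if costs:
        avg = sum(costs) / len(costs)
        if avg > COST_PER_QUERY_ALERT_USD:
            alerts.append(f"cost per query ${avg:.3f} exceeds ${COST_PER_QUERY_ALERT_USD:.2f}")
    total = sum(costs)
    if total > daily_budget_usd:
        alerts.append(f"daily spend ${total:.2f} exceeds budget ${daily_budget_usd:.2f}")
    return alerts


# 10k input + 1k output tokens costs $0.045 at the hypothetical rates above.
print(cost_alerts([QueryUsage(10_000, 1_000)] * 100, daily_budget_usd=10.0))  # prints []
# A context-heavy workload ($0.15 per query) trips both alerts.
print(cost_alerts([QueryUsage(40_000, 2_000)] * 100, daily_budget_usd=10.0))
```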

Quality Metrics

| Metric | Description | Target |
|---|---|---|
| Success rate | Share of queries completed | >99% |
| Relevance score | Answer quality | >0.85 |
| Faithfulness | Answer grounded in context | >0.9 |
| User satisfaction | Share of thumbs-up ratings | >80% |
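Quality metrics are aggregates over many queries, and evaluator scores and user feedback usually cover only a subset of them. A minimal sketch, assuming each query's outcome is recorded with an optional 0 to 1 evaluator score and an optional thumbs-up/down; `QueryOutcome` and `quality_report` are hypothetical names, and the scores themselves would come from whatever evaluation tool you use.

```python
from dataclasses import dataclass
from typing import Optional

# Targets from the quality table; each must be exceeded (">") to pass.
QUALITY_TARGETS = {
    "success_rate": 0.99,
    "relevance": 0.85,
    "faithfulness": 0.90,
    "user_satisfaction": 0.80,
}


@dataclass
class QueryOutcome:
    completed: bool
    relevance: Optional[float] = None     # evaluator score, if this query was scored
    faithfulness: Optional[float] = None  # evaluator score, if this query was scored
    thumbs_up: Optional[bool] = None      # None when the user left no feedback


def _mean(values: list[float]) -> Optional[float]:
    return sum(values) / len(values) if values else None


def quality_report(outcomes: list[QueryOutcome]) -> dict[str, tuple[Optional[float], Optional[bool]]]:
    """Map each metric to (observed value, passes target); (None, None) if no data."""
    observed = {
        "success_rate": _mean([1.0 if o.completed else 0.0 for o in outcomes]),
        "relevance": _mean([o.relevance for o in outcomes if o.relevance is not None]),
        "faithfulness": _mean([o.faithfulness for o in outcomes if o.faithfulness is not None]),
        "user_satisfaction": _mean(
            [1.0 if o.thumbs_up else 0.0 for o in outcomes if o.thumbs_up is not None]
        ),
    }
    return {
        name: (value, None if value is None else value > QUALITY_TARGETS[name])
        for name, value in observed.items()
    }


# Hypothetical day: 98 scored successes, 2 failures with no scores or feedback.
day = [QueryOutcome(True, relevance=0.9, faithfulness=0.95, thumbs_up=True)] * 98
day += [QueryOutcome(False)] * 2
for metric, (value, passed) in quality_report(day).items():
    print(metric, value, passed)
```

Returning `None` for a metric with no data avoids reporting a misleading pass or fail when, for example, no users left feedback.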

How Should You Choose an Observability Tool?

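Use this decision framework, based on each tool's strengths:

- Standardized on LangChain/LangGraph: LangSmith

- ML team or complex multi-agent research: W&B Weave

- Privacy requirements, self-hosting, or cost control at scale: Langfuse

- Existing observability tooling or multi-cloud environments: OpenTelemetry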

What Belongs on a Production AI Observability Checklist?

Before Launch

- Tracing enabled for all agents

- Token tracking configured

- Cost alerts set up

- Error alerting configured

- Baseline metrics captured

- Evaluation datasets created

Ongoing Operations

- Daily cost review

- Weekly quality audits

- Monthly optimization review

- Quarterly benchmark comparison

Frequently Asked Questions

What is AI agent observability?

AI agent observability is the practice of monitoring, tracing, and evaluating autonomous AI systems in production. Unlike traditional software monitoring, it must handle probabilistic behavior, variable costs, and quality gradients rather than binary success/failure metrics.

Which observability tool is best for LangGraph?

LangSmith is the best observability tool for LangGraph users because it provides zero-overhead automatic tracing, deep integration with the LangChain ecosystem, and built-in evaluation capabilities. It's the first-party solution designed specifically for this framework.

How much does AI observability cost?

AI observability costs vary by tool and scale. LangSmith and Langfuse offer free tiers for small projects. At scale, expect to spend $100 to $1,000 per month depending on trace volume. Self-hosting Langfuse eliminates SaaS costs but adds infrastructure overhead.

Can I use OpenTelemetry for AI agents?

Yes, OpenTelemetry is increasingly used for AI agent observability. It provides a vendor-neutral standard that integrates with existing APM tools like Datadog and Grafana. The trade-off is more setup compared to purpose-built tools like LangSmith.

What metrics matter most for production AI agents?

The most critical metrics for production AI agents are: p95 latency (user experience), cost per query (economics), success rate (reliability), and quality scores (value delivery). Cost tracking is especially important since LLM costs can spiral without visibility.

Key Takeaways

- Gartner predicts 60% of AI agent deployments will fail without proper observability by 2027

- LangSmith is the default for LangGraph users with zero-overhead tracing

- Langfuse offers open-source flexibility for cost-conscious or privacy-focused teams

- Track latency, cost, and quality—not just success/failure

- Start observability on day one, not after your first production incident

Related Resources

- AI Architecture Hub - Patterns and blueprints for production AI

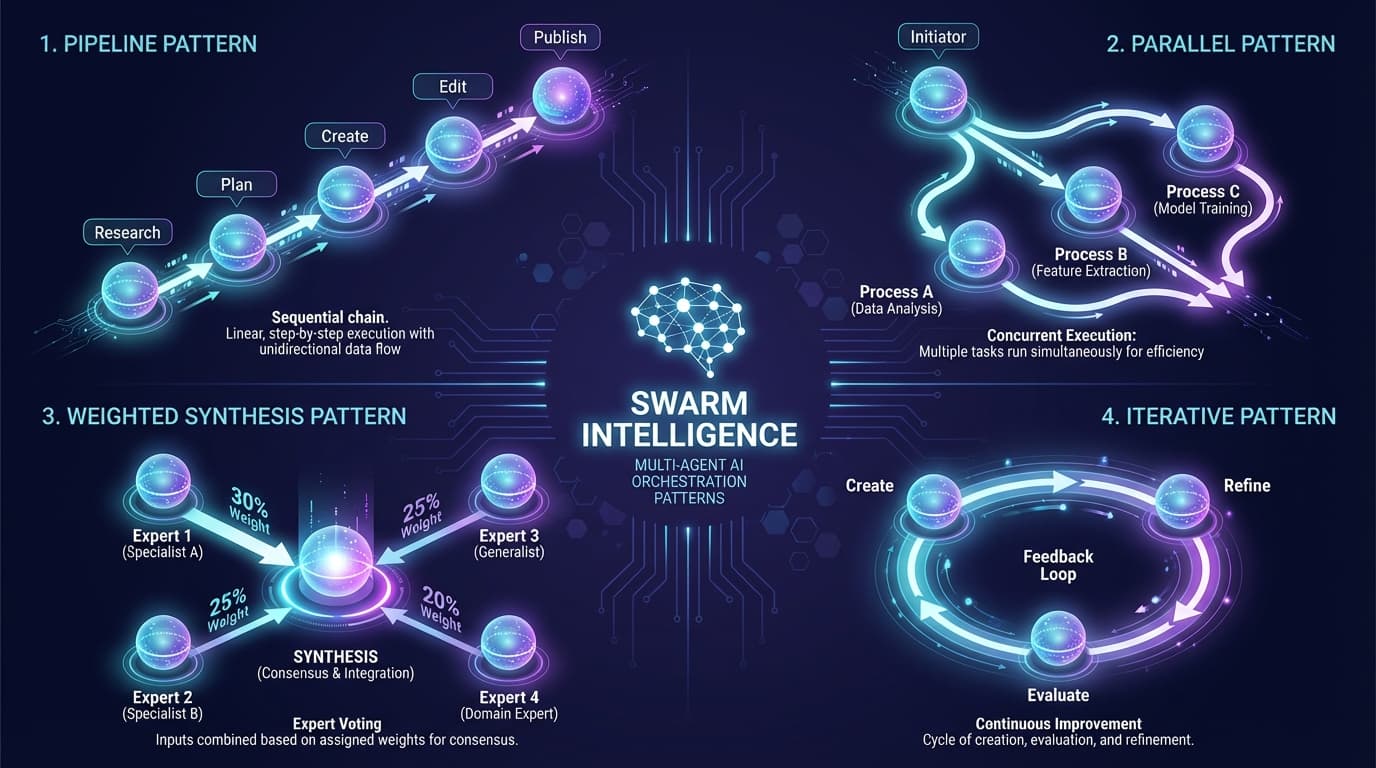

- Multi-Agent Orchestration Patterns 2026 - How agents coordinate

- Research: MCP Ecosystem Analysis - Tool integration patterns

Built with research from the FrankX Research Intelligence layer. All statistics validated with multiple sources.
