Production LLMs & AI Agents on OCI: Part 1 - The Six-Plane Enterprise Architecture

Enterprise AI · January 21, 2026 · 18 min read

The complete enterprise architecture blueprint for deploying production-grade LLM and agentic AI systems on Oracle Cloud Infrastructure. Six architectural planes, OCI service mapping, and the decision framework that gets you from demo to deployment.

TL;DR: Most GenAI initiatives stall because teams build the model layer and neglect the other five planes that make production possible. This blueprint maps enterprise architecture requirements to OCI services across six planes—from identity to observability—giving you the complete stack that survives compliance reviews and 3 AM incidents.

Why Architecture Matters More Than Models

Every enterprise I work with has built an LLM demo in a week.

Most are still running that same demo six months later.

The bottleneck isn't the model. It's everything around it.

The LLM is a dependency. The system is a product.

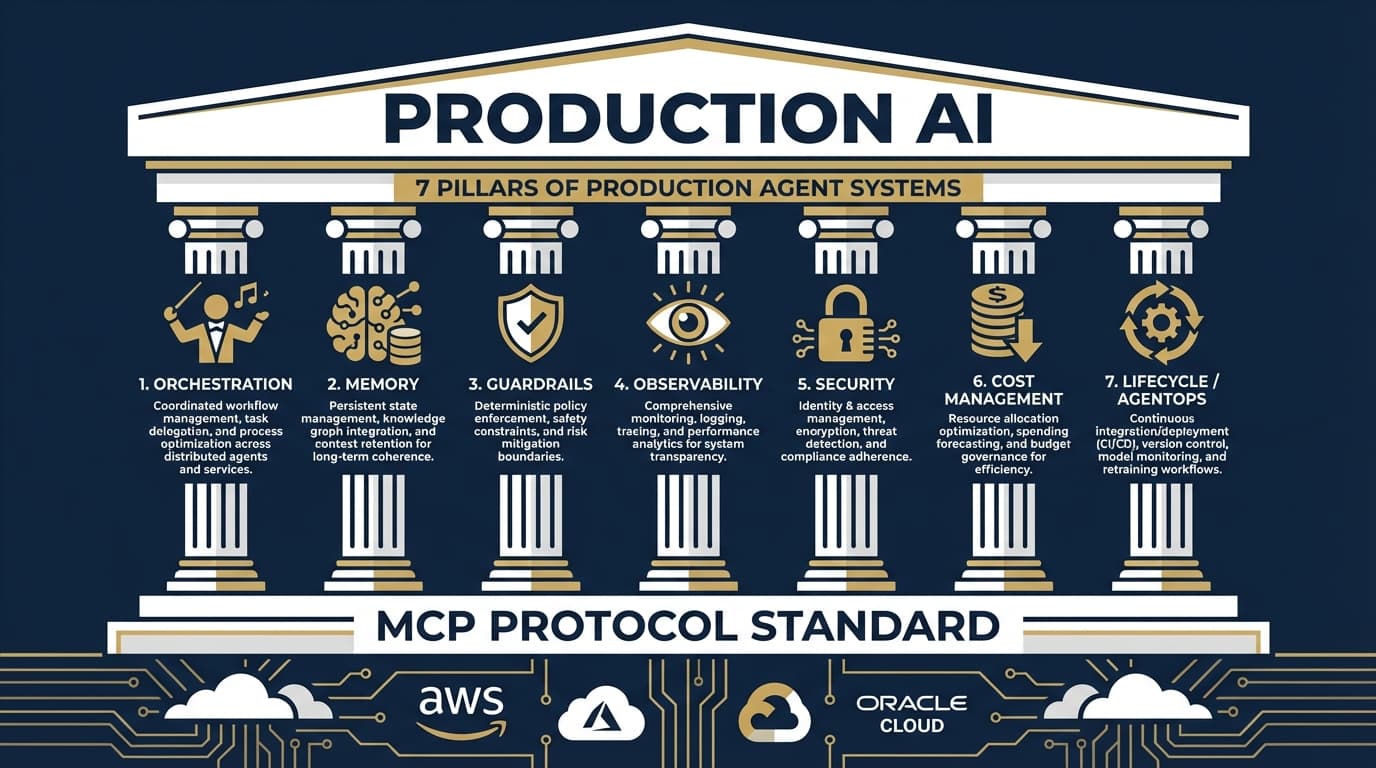

The Six-Plane Enterprise Architecture

This framework organizes production GenAI systems into six architectural planes. Each plane has:

- Clear responsibility boundaries

- Dedicated OCI services

- Specific governance controls

- Failure isolation

Plane 1: Experience Plane

Responsibility: User-facing interfaces that consume AI capabilities

Key Characteristics:

- Must handle streaming responses gracefully

- Show intermediate steps for agentic workflows

- Support human-in-the-loop approval flows

- Adapt to tool outputs (tables, charts, forms)
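A UI that meets all four requirements usually consumes a typed event stream rather than raw text. The sketch below is a minimal illustration, assuming a hypothetical event schema (`token`, `step`, `table`, `approval`) emitted by the orchestration layer; neither APEX nor Digital Assistant prescribes this format, and all names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Iterable, List


@dataclass
class Event:
    # Hypothetical schema: kind is one of "token", "step", "table", "approval"
    kind: str
    payload: Any


@dataclass
class ChatView:
    text: str = ""
    steps: List[Any] = field(default_factory=list)
    tables: List[Any] = field(default_factory=list)
    pending_approvals: List[Any] = field(default_factory=list)


def render(events: Iterable[Event], approve: Callable[[Any], bool]) -> ChatView:
    """Fold a streamed event sequence into UI state, pausing on approval requests."""
    view = ChatView()
    for event in events:
        if event.kind == "token":
            view.text += event.payload          # append streamed text incrementally
        elif event.kind == "step":
            view.steps.append(event.payload)    # surface intermediate agent steps
        elif event.kind == "table":
            view.tables.append(event.payload)   # render structured tool output
        elif event.kind == "approval":
            # Human-in-the-loop: stop consuming the stream if the user declines
            if not approve(event.payload):
                view.pending_approvals.append(event.payload)
                break
        else:
            raise ValueError(f"unknown event kind: {event.kind}")
    return view
```

Treating approvals as just another event type keeps the streaming path and the human-in-the-loop path in one loop, so a declined action halts rendering at exactly the point the agent asked.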

OCI Service Mapping:

| Component | OCI Service | Alternative |
|---|---|---|
| Web/Mobile UI | OCI APEX | Custom React/Next.js on OKE |
| Chat Interface | Digital Assistant | Custom chat on OCI Functions |
| Admin Console | OCI Console Extensions | Custom dashboard |
| API Consumers | Any client via REST | SDK-based integration |

Architecture Decision: APEX vs. Custom

Plane 2: Ingress & Policy Plane

Responsibility: Security boundary, traffic management, tenant governance

Why This Plane Fails First:

- Agents making tool calls can generate 10-100x the API traffic of a simple chat

- Token costs can explode without per-tenant quotas

- Unauthenticated endpoints become prompt injection vectors
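Per-tenant quotas are easiest to reason about as a token bucket keyed by tenant. This is a minimal in-process sketch, assuming the LLM token count of a request is known or estimated before the model call; a real gateway would keep buckets in shared storage, and the capacity and refill values in the usage are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Bucket:
    tokens: float
    updated: float


class TenantTokenBudget:
    """Token bucket per tenant: `capacity` LLM tokens, refilled at `refill_per_sec`."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: Dict[str, Bucket] = {}

    def try_consume(self, tenant_id: str, requested_tokens: int) -> bool:
        now = self.clock()
        bucket = self.buckets.setdefault(tenant_id, Bucket(self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity
        elapsed = now - bucket.updated
        bucket.tokens = min(self.capacity, bucket.tokens + elapsed * self.refill_per_sec)
        bucket.updated = now
        if requested_tokens <= bucket.tokens:
            bucket.tokens -= requested_tokens
            return True
        return False  # caller would answer HTTP 429 for this tenant only
```

Because each tenant has its own bucket, one tenant's agent loop exhausting its budget is rejected without affecting anyone else's capacity.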

OCI Service Mapping:

| Component | OCI Service | Configuration |
|---|---|---|
| Edge Protection | OCI WAF | Rate limiting, geo-blocking, bot mitigation |
| API Management | OCI API Gateway | AuthN/Z, request transformation, throttling |
| Identity | OCI IAM + Identity Domains | OIDC, SAML, MFA enforcement |
| Secrets | OCI Vault | API keys, model credentials, encryption keys |
| DNS & CDN | OCI DNS + Edge Services | Global routing, caching static assets |

Reference Architecture: Multi-Tenant API Layer

Critical Controls:

| Control | Implementation | Why It Matters |
|---|---|---|
| Per-Tenant Token Budget | API Gateway + Logging Analytics | Prevents one tenant from consuming all model capacity |
| Request Signing | OCI Vault + API Gateway | Prevents request tampering; with signed timestamps and nonces, also blocks replay attacks |
| Prompt Injection Detection | WAF Rules + Custom Functions | First line of defense against adversarial inputs |
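Request signing only stops replays if the signature covers a timestamp and a single-use nonce. Below is a minimal HMAC-SHA256 sketch; the canonical string layout and the five-minute window are assumptions for illustration, not API Gateway's built-in mechanism, and the shared secret would be fetched from OCI Vault rather than passed around in code.

```python
import hashlib
import hmac
import time
from typing import Optional, Set


def canonical_string(method: str, path: str, body: bytes, timestamp: int, nonce: str) -> bytes:
    # Hypothetical canonical form: every field that must not be altered is signed
    body_hash = hashlib.sha256(body).hexdigest()
    return "\n".join([method.upper(), path, body_hash, str(timestamp), nonce]).encode()


def sign(secret: bytes, method: str, path: str, body: bytes, timestamp: int, nonce: str) -> str:
    return hmac.new(secret, canonical_string(method, path, body, timestamp, nonce),
                    hashlib.sha256).hexdigest()


class Verifier:
    def __init__(self, secret: bytes, max_skew_sec: int = 300):
        self.secret = secret
        self.max_skew_sec = max_skew_sec
        self.seen_nonces: Set[str] = set()  # production: shared store with TTL

    def verify(self, method: str, path: str, body: bytes, timestamp: int,
               nonce: str, signature: str, now: Optional[int] = None) -> bool:
        now = int(time.time()) if now is None else now
        if abs(now - timestamp) > self.max_skew_sec:
            return False  # stale or future-dated request
        expected = sign(self.secret, method, path, body, timestamp, nonce)
        if not hmac.compare_digest(expected, signature):
            return False  # tampered body or path, or wrong key
        if nonce in self.seen_nonces:
            return False  # replay of an already accepted request
        self.seen_nonces.add(nonce)
        return True
```

The nonce is recorded only after the signature checks out, so forged requests cannot burn legitimate nonces.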

Plane 3: Orchestration Plane

Responsibility: Agent runtime, workflow control, state management, tool routing

The Core Decision: Managed Agents vs. Framework Agents

OCI Service Mapping:

| Component | Managed (Agent Platform) | Framework (LangGraph) |
|---|---|---|
| Runtime | OCI AI Agent Platform | OKE + Container Instances |
| State | Built-in | Redis on OCI / Autonomous JSON Database |
| Memory | Built-in sessions | Custom implementation |
| Tools | Pre-built connectors | MCP servers on OKE or Container Instances |
| Scaling | Automatic | HPA + KEDA |
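On the framework path, tool routing is code you own. A minimal sketch of an allowlisted tool router, assuming each agent is granted a named set of tools and tool arguments arrive as a dict; the agent and tool names are hypothetical.

```python
from typing import Any, Callable, Dict, Set


class ToolRouter:
    """Dispatch tool calls by name, enforcing a per-agent allowlist."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._grants: Dict[str, Set[str]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def grant(self, agent_id: str, *tool_names: str) -> None:
        self._grants.setdefault(agent_id, set()).update(tool_names)

    def call(self, agent_id: str, tool_name: str, args: Dict[str, Any]) -> Any:
        # Deny by default: an agent can only reach tools it was explicitly granted
        if tool_name not in self._grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self._tools[tool_name](**args)
```

For example, a support agent granted only `lookup_order` gets a `PermissionError` if the model tries to invoke `issue_refund`, regardless of what the prompt asked for.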

Orchestration Patterns on OCI (Detailed in Part 2):

| Pattern | OCI Implementation | Use Case |
|---|---|---|
| Sequential | Agent Platform workflow | Document processing pipeline |
| Concurrent | OKE parallel pods | Multi-perspective analysis |
| Handoff | Agent-to-agent routing | Customer service escalation |
| Orchestrator-Worker | Parent agent + child agents | Complex research tasks |
| Human-in-the-Loop | APEX approval UI + agent | High-stakes decisions |
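The Sequential and Concurrent patterns differ only in how agent calls are composed. A minimal asyncio sketch, where each agent is a hypothetical async function standing in for a model or Agent Platform call; Part 2 covers the production versions.

```python
import asyncio
from typing import Awaitable, Callable, List

Agent = Callable[[str], Awaitable[str]]


async def sequential(agents: List[Agent], task: str) -> str:
    # Sequential: each agent's output becomes the next agent's input
    result = task
    for agent in agents:
        result = await agent(result)
    return result


async def concurrent(agents: List[Agent], task: str) -> List[str]:
    # Concurrent: every agent sees the same input; gather preserves agent order
    return list(await asyncio.gather(*(agent(task) for agent in agents)))


def make_agent(label: str) -> Agent:
    # Hypothetical stand-in for a model-backed agent
    async def run(text: str) -> str:
        await asyncio.sleep(0)
        return f"{label}({text})"
    return run
```

With `extract` and `summarize` agents, `sequential` yields `summarize(extract(doc))`, while `concurrent` over `legal` and `finance` agents returns both perspectives side by side for a later merge step.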

Plane 4: Data & Retrieval Plane

Responsibility: Knowledge access, vector search, structured data, document processing

Oracle's Differentiator: Native Vector Search in Oracle Database 23ai

Unlike bolt-on vector stores, Oracle Database 23ai provides:

- Unified data model: Vectors alongside relational data

- ACID transactions: Vector operations in the same transaction as business data

- Enterprise security: Row-level security applies to vector content

- SQL integration: `VECTOR_DISTANCE()` in standard SQL queries
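Conceptually, a similarity search ranks only the rows the caller is allowed to see. The pure-Python sketch below mimics that with cosine distance (1 minus cosine similarity, which `VECTOR_DISTANCE()` uses when no metric is specified) and a tenant filter standing in for row-level security. It illustrates the semantics only, not how the database executes the query; the `Chunk` type is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Chunk:
    tenant_id: str
    text: str
    embedding: Sequence[float]


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm  # 0 = same direction, 1 = orthogonal


def search(chunks: List[Chunk], query: Sequence[float], tenant_id: str, k: int = 3) -> List[str]:
    # Access filter first (row-level security analogue), then rank by distance
    visible = [c for c in chunks if c.tenant_id == tenant_id]
    visible.sort(key=lambda c: cosine_distance(c.embedding, query))
    return [c.text for c in visible[:k]]
```

Filtering before ranking is the property that matters: a near-identical chunk from another tenant can never surface, however close its embedding is.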

OCI Service Mapping:

| Component | OCI Service | Alternative |
|---|---|---|
| Document Storage | Object Storage | None needed |
| Processing | OCI Data Integration / Functions | Custom on OKE |
| Embedding | OCI Generative AI (Cohere Embed) | Self-hosted models |
| Vector Store | Oracle Database 23ai | OpenSearch (if multi-cloud) |
| Structured Data | Autonomous Database | MySQL HeatWave |
| Graph Data | Oracle Property Graph (23ai) | None comparable |
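Between Object Storage and the embedding model, documents are usually split into overlapping chunks so that text near a boundary keeps some surrounding context. A minimal character-based sketch; the sizes are placeholders, and real pipelines often split on tokens or sentence boundaries instead.

```python
from typing import List


def chunk_text(text: str, size: int = 800, overlap: int = 100) -> List[str]:
    """Split text into windows of `size` characters, each sharing `overlap` characters with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

For example, `chunk_text("abcdefghij", size=4, overlap=1)` returns `["abcd", "defg", "ghij"]`.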

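The Select AI Pattern (Grounded Natural-Language SQL):

```sql
-- Select AI (Autonomous Database): set an AI profile for the session first.
-- 'GENAI_SALES' is a placeholder for a profile created with DBMS_CLOUD_AI.CREATE_PROFILE.
EXEC DBMS_CLOUD_AI.SET_PROFILE('GENAI_SALES');

-- Default action (runsql): the model generates SQL and the database executes it
SELECT AI show me the top 10 customers by revenue this quarter;

-- showsql returns the generated SQL so it can be reviewed before results are trusted
SELECT AI showsql show me the top 10 customers by revenue this quarter;
```

Results come from real rows, so the model cannot invent figures. The generated SQL can still misread the question (a wrong join or date range), so review `showsql` output for high-stakes queries.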
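Generated SQL is best executed under a read-only database user. As an extra application-side check on `showsql` output, a deliberately conservative keyword filter might look like the sketch below. It is a hypothetical guard, not a Select AI feature, and it will reject some harmless queries (for example, a string literal containing the word "update").

```python
import re

# Hypothetical policy: allow one read-only statement; reject anything that writes or changes schema
FORBIDDEN = re.compile(
    r"\b(INSERT|UPDATE|DELETE|MERGE|DROP|ALTER|CREATE|TRUNCATE|GRANT|REVOKE|EXECUTE|BEGIN)\b",
    re.IGNORECASE,
)


def is_safe_select(sql: str) -> bool:
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # more than one statement
    if not re.match(r"(SELECT|WITH)\b", statement, re.IGNORECASE):
        return False  # must be a query
    return FORBIDDEN.search(statement) is None
```

Word boundaries keep column names such as `CREATED_AT` from tripping the `CREATE` rule.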

Plane 5: Model Plane

Responsibility: LLM endpoints, model selection, fine-tuning, inference optimization

OCI Generative AI: Model-Agnostic Enterprise AI

Enterprise Security Controls:

| Control | Implementation |
|---|---|
| Data Residency | Sovereign Cloud regions (UK, EU, US Gov) |
| Network Isolation | Private endpoints, no public internet |
| Encryption | Customer-managed keys (OCI Vault) |
| Access Control | Fine-grained IAM policies per model |
| Audit | All API calls logged to Logging Analytics |
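In a model-agnostic setup, model selection often reduces to an ordered list of endpoints with fallback. A minimal sketch, assuming each endpoint is wrapped as a callable that raises on failure; the model identifiers are placeholders, and real code would call OCI Generative AI through its SDK rather than these stand-ins.

```python
from typing import Callable, List, Tuple

ModelCall = Callable[[str], str]


def generate_with_fallback(prompt: str, routes: List[Tuple[str, ModelCall]]) -> Tuple[str, str]:
    """Try each (model_id, call) in priority order; return (model_id, output) from the first success."""
    errors = []
    for model_id, call in routes:
        try:
            return model_id, call(prompt)
        except Exception as exc:  # e.g. timeouts, throttling, capacity errors
            errors.append(f"{model_id}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

Returning the `model_id` alongside the output lets the operations plane record which model actually served each request.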

Plane 6: Operations & Governance Plane

Responsibility: Observability, evaluation, CI/CD, audit, lifecycle management

Why This Plane Is Non-Negotiable:

- AI systems behave non-deterministically

- Quality degrades silently without evaluation

- Compliance requires complete audit trails

- Debugging multi-agent systems requires distributed tracing
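Silent degradation is caught by re-running a fixed evaluation set on every prompt, model, or retrieval change and blocking the release when the score drops. A minimal sketch of such a gate, assuming a hypothetical golden set and a simple substring check as the grader; production suites typically add task-specific metrics or model-graded scoring.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    prompt: str
    must_contain: str


def pass_rate(system: Callable[[str], str], cases: List[Case]) -> float:
    if not cases:
        raise ValueError("evaluation set is empty")
    passed = sum(1 for c in cases if c.must_contain.lower() in system(c.prompt).lower())
    return passed / len(cases)


def release_gate(system: Callable[[str], str], cases: List[Case],
                 baseline: float, tolerance: float = 0.02) -> bool:
    """Allow release only if the pass rate is no more than `tolerance` below the baseline."""
    return pass_rate(system, cases) >= baseline - tolerance
```

Wired into a CI/CD pipeline, a `False` from `release_gate` fails the build before a regressed prompt or model reaches users.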

OCI Service Mapping:

| Component | OCI Service | Integration |
|---|---|---|
| Logging | OCI Logging + Logging Analytics | Ingest all agent/model traffic |
| Tracing | OCI APM | OpenTelemetry instrumentation |
| Metrics | OCI Monitoring | Custom metrics + dashboards |
| Alerting | OCI Notifications | Slack, PagerDuty, email |
| Audit | OCI Audit | Immutable compliance records |
| CI/CD | OCI DevOps | Model deployment pipelines |
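Logs and traces only connect if every model call emits a structured record carrying the same trace ID that APM sees. A minimal sketch using the standard `logging` and `json` modules; the field names are assumptions rather than a Logging Analytics schema, and in a real service the trace ID would come from the active OpenTelemetry span instead of being passed in.

```python
import json
import logging
import time

logger = logging.getLogger("genai.calls")


def log_model_call(trace_id: str, tenant_id: str, model_id: str,
                   input_tokens: int, output_tokens: int, started: float) -> dict:
    """Emit one JSON line per model call (hypothetical field names)."""
    record = {
        "trace_id": trace_id,      # joins this log line to the distributed trace
        "tenant_id": tenant_id,    # enables per-tenant cost and quota analysis
        "model_id": model_id,
        "input_tokens": input_tokens,
        "output_tokens": output_tokens,
        "latency_ms": round((time.monotonic() - started) * 1000, 1),
    }
    logger.info(json.dumps(record, sort_keys=True))
    return record
```

One flat JSON object per call keeps the record easy to parse downstream and lets token counts roll up into the per-tenant budgets described in the Ingress & Policy Plane.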

Complete OCI Service Mapping

One-Page Reference: All Six Planes

Multi-Cloud Positioning: When to Choose OCI

| Decision Factor | Choose OCI When | Consider Alternatives When |
|---|---|---|
| Data residency | Sovereign Cloud regions required | Global-only workloads OK |
| Database integration | Oracle DB is source of truth | Greenfield with no Oracle |
| Model flexibility | Need multi-provider models | Committed to single vendor |
| Enterprise security | Strict compliance (HIPAA, FedRAMP) | Startup-scale security OK |
| Cost predictability | Dedicated AI clusters preferred | Variable pay-per-token OK |

What's Next

Part 2: Agent Orchestration Patterns — Six orchestration patterns mapped to OCI services, with decision criteria for each.

Part 3: The Operating Model — Evaluation pipelines, CI/CD for AI, incident response, and the maturity roadmap.

FAQ

Q: What's the minimum viable production architecture on OCI?

VCN with private subnets + OCI WAF + API Gateway + OCI AI Agent Platform (or OKE running LangGraph) + OCI Generative AI hosted models + Autonomous Database 23ai + Logging Analytics + APM.

Q: How does OCI compare to AWS Bedrock or Azure OpenAI?

OCI differentiates on: (1) native database integration with 23ai vector search, (2) model-agnostic multi-provider approach, (3) sovereign cloud regions for strict data residency, (4) dedicated AI clusters for isolation and predictable costs.

Q: Can I use open-source frameworks like LangGraph on OCI?

Yes. Deploy LangGraph on OKE, use OCI Generative AI as the model provider, and OCI services for all surrounding infrastructure. Part 2 details this pattern.

Q: What about cost management for unpredictable agent workloads?

Implement per-tenant token budgets at the API Gateway, use dedicated AI clusters for predictable costs, and set up cost alerts in OCI Cost Management. Part 3 covers operational cost controls.

This is Part 1 of a 3-part series on production LLM and agentic AI systems on OCI.

Read on FrankX.AI — AI Architecture, Music & Creator Intelligence

Weekly Intelligence

Stay in the intelligence loop

Join 1,000+ creators and architects receiving weekly field notes on AI systems, production patterns, and builder strategy.

No spam. Unsubscribe anytime.